FIT CTU

Adam Vesecký

vesecky.adam@gmail.com

Lecture 6

Audio

Architecture of Computer Games

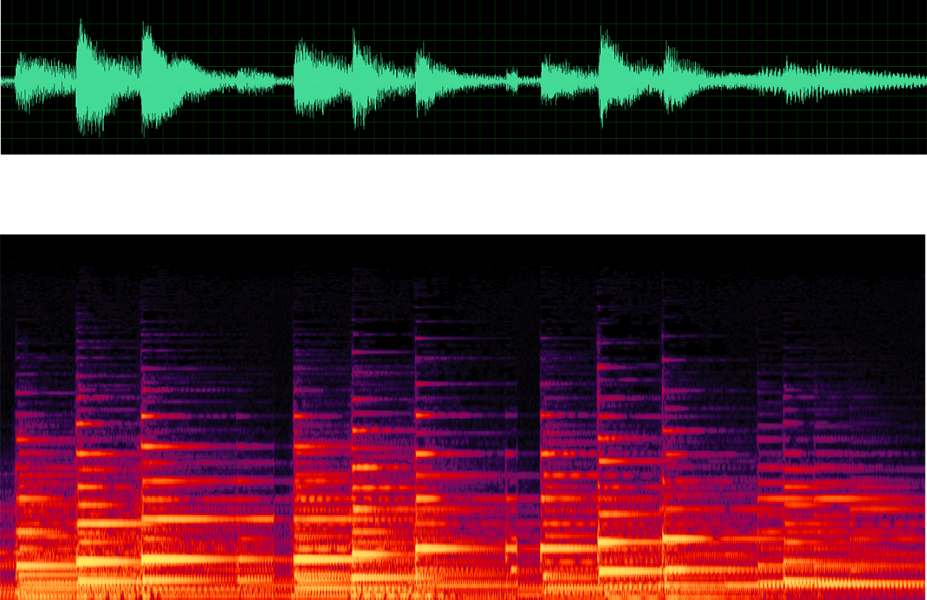

Digital Sound

PCM

- pulse-code modulation, a method used to digitally represent sampled analog signals

- sample - fundamental unit, representing the amplitude of an audio signal in time

- bit depth - each bit of resolution adds roughly 6 dB of dynamic range (16 bits give about 96 dB)

- sample rate - number of samples per second: 8 kHz, 22.05 kHz, 44.1 kHz, 48 kHz

- frequency - number of cycles of a periodic signal per second, measured in Hz

- frame - collection of samples, one for each output (e.g. 2 for stereo)

- buffer - collection of frames in memory, typically 64-4096 frames per buffer

- the larger the buffer, the higher the latency, but the lower the load on the CPU
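To make the buffer trade-off concrete, here is a minimal sketch that computes how long one buffer takes to play (frames divided by sample rate), the theoretical dynamic range of a given bit depth, and the memory one interleaved buffer occupies. The function names are illustrative only.

```cpp
#include <cmath>
#include <cstdint>

// Time needed to play one buffer, in milliseconds. A frame holds one sample
// per channel, so the channel count does not change the buffer duration.
double buffer_latency_ms(std::uint32_t frames_per_buffer, double sample_rate_hz) {
    return 1000.0 * frames_per_buffer / sample_rate_hz;
}

// Theoretical dynamic range of linear PCM: 20 * log10(2^bits), about 6.02 dB per bit.
double dynamic_range_db(int bit_depth) {
    return 20.0 * std::log10(std::pow(2.0, bit_depth));
}

// Memory taken by one interleaved buffer (assumes the bit depth is a multiple of 8).
std::uint32_t buffer_bytes(std::uint32_t frames, std::uint32_t channels, std::uint32_t bit_depth) {
    return frames * channels * (bit_depth / 8);
}
```

For example, 256 frames at 48 kHz last about 5.3 ms, while 4096 frames at 44.1 kHz last about 93 ms, which is already a noticeable delay between a game event and its sound.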

Bit depth and sample rate

Sound formats

Time Domain formats

- better for random access

- sound samples and effects

- PCM, WAV, FLAC

Frequency Domain formats

- better for compression

- music and voices

- MP3, OGG Vorbis

Synth formats

- contain notes, effects,...

- old trackers: MOD, S3M, XM, IT

- MIDI for streaming (e.g. from electronic piano)

- proprietary formats of various audio editors

- mostly used for pre-production

Sound formats

History of digital sound


- before 1980 - simple programmable sound generators

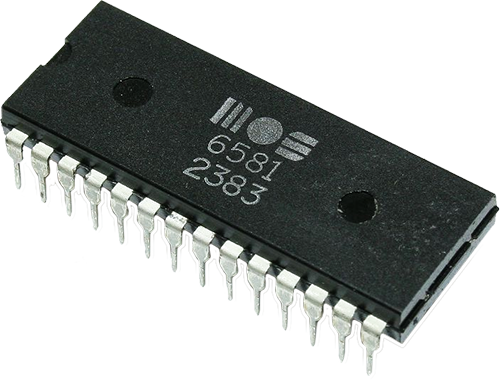

- 1981 - Commodore MOS 6581, rise of demoscene

- 1985 - Yamaha YM3812 (OPL2), FM synth widely used in DOS games

- 1987 - Amiga Ultimate Soundtracker, rise of trackers

- 1989 - Sound Blaster 1.0 - IBM PC gets PCM sounds

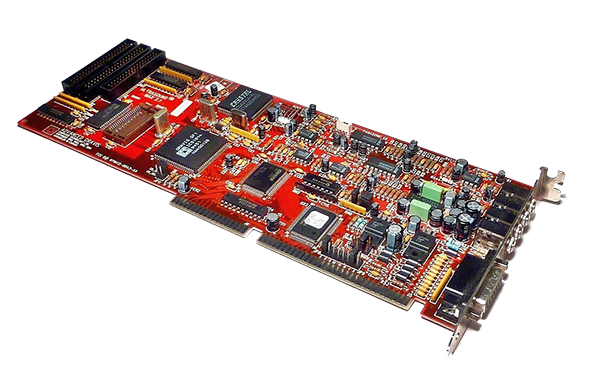

- 1992 - Gravis Ultrasound - boosted MIDI music with wavetables

- 1996 - DirectSound - rise (and fall) of hardware-accelerated sound in games

- 1996 - VST plugins - pushed limits of synthetic music

- 1997 - Realtek ALC5611 - rise of on-board chips for audio

- 1998 - Sound Blaster Live! - Creative Labs takes the lead (for the last time)

- 2004 - High Definition Audio - new specification for PC audio

- 2005 - sound processing goes back to software (thanks to multicore CPUs)

- 2012 - Dolby Atmos - technology for 3D sound

- 2017 - NVidia VRWorks, rise of GPU-powered sound processing

Sound synthesizing, recording and composing

Sound generators

- generator - oscillator that can make a tone, either independently or by pairing with another generator

- synth music is a combination of generated tones, effects, filters, and recorded sound samples

- main synthesis types: additive, subtractive, FM, wavetable
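A generator can be sketched as a phase accumulator that advances by frequency / sample rate every sample and maps the phase to a basic waveform (sine, square, sawtooth, triangle) or, for noise, simply emits random values. This is a naive sketch: the square and sawtooth here are not band-limited, so they alias at high pitches, which real synthesizers avoid. All names are illustrative.

```cpp
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

constexpr double kPi = 3.14159265358979323846;

enum class Waveform { Sine, Square, Sawtooth, Triangle };

// Value of a waveform at a phase in [0, 1), output range [-1, 1].
double waveform_value(Waveform w, double phase) {
    switch (w) {
        case Waveform::Sine:     return std::sin(2.0 * kPi * phase);
        case Waveform::Square:   return phase < 0.5 ? 1.0 : -1.0;
        case Waveform::Sawtooth: return 2.0 * phase - 1.0;
        case Waveform::Triangle: return 1.0 - 4.0 * std::fabs(phase - 0.5);
    }
    return 0.0;
}

// Fills `frames` mono samples of a tone at `freq_hz`.
std::vector<float> generate_tone(Waveform w, double freq_hz, double sample_rate, std::size_t frames) {
    std::vector<float> out(frames);
    double phase = 0.0;
    const double step = freq_hz / sample_rate;  // phase increment per sample
    for (std::size_t i = 0; i < frames; ++i) {
        out[i] = static_cast<float>(waveform_value(w, phase));
        phase += step;
        if (phase >= 1.0) phase -= 1.0;  // wrap to keep the phase in [0, 1)
    }
    return out;
}

// White noise: uniformly distributed random samples in [-1, 1).
std::vector<float> generate_noise(std::size_t frames, unsigned seed = 1) {
    std::mt19937 rng(seed);
    std::uniform_real_distribution<float> dist(-1.0f, 1.0f);
    std::vector<float> out(frames);
    for (float& s : out) s = dist(rng);
    return out;
}
```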

ADSR Envelope

- envelope describes how a sound changes over time

- attack - time it takes for the sound to go from silent to the loudest level

- decay - how long it takes for the sound to go from the initial peak to the steady state

- sustain - level at which the sound is held after the decay (until the note is released)

- release - time it takes for the sound to return to silence (fade out)

Additive Synthesis

- builds waveforms by summing sine waves at selected harmonics, each with its own amplitude

- well suited for organ and bell sounds
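Additive synthesis can be sketched directly as a sum of sines, one per partial. Integer ratios to the fundamental give harmonic, organ-like tones; non-integer ratios give inharmonic, bell-like ones. The `Partial` struct and the example values are illustrative assumptions.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

constexpr double kPi = 3.14159265358979323846;

// One partial: a sine wave at `ratio` times the fundamental frequency.
struct Partial {
    double ratio;      // 1 = fundamental, 2 = 2nd harmonic, ...
    double amplitude;  // linear gain of this partial
};

// Additive synthesis: every output sample is the sum of independent sine waves.
std::vector<float> additive(const std::vector<Partial>& partials, double f0,
                            double sample_rate, std::size_t frames) {
    std::vector<float> out(frames, 0.0f);
    for (std::size_t i = 0; i < frames; ++i) {
        const double t = i / sample_rate;
        double sum = 0.0;
        for (const Partial& p : partials)
            sum += p.amplitude * std::sin(2.0 * kPi * p.ratio * f0 * t);
        out[i] = static_cast<float>(sum);
    }
    return out;
}
```

For instance, `additive({{1.0, 0.6}, {2.0, 0.3}, {3.0, 0.1}}, 220.0, 44100.0, 512)` renders a simple organ-like tone; keeping the amplitudes summing to at most 1 avoids clipping.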

Circuit abbreviations

- VCA - voltage-controlled amplifier

- VCO - voltage-controlled oscillator

- VCF - voltage-controlled filter

- EG - envelope generator

- LFO - low frequency oscillator, used for vibrato, tremolo etc.

Subtractive Synthesis

- starts with a sound that is rich in harmonics and applies filters to attenuate or subtract frequencies

- filters are controlled by cut-off frequency and resonance

- sound is warm and organic

- dry signal - original sound

- wet signal - processed sound (reflections, reverberations)
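A minimal subtractive voice can be sketched as a harmonically rich sawtooth passed through a low-pass filter, with the filtered (wet) signal blended with the original (dry) one. The one-pole filter used here is an assumption for brevity: it has a cut-off frequency but no resonance, unlike typical synthesizer filters.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

constexpr double kPi = 3.14159265358979323846;

// One-pole low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1]). No resonance control.
class OnePoleLowpass {
public:
    OnePoleLowpass(double cutoff_hz, double sample_rate)
        : a_(1.0 - std::exp(-2.0 * kPi * cutoff_hz / sample_rate)) {}

    float process(float x) {
        y_ += a_ * (x - y_);
        return static_cast<float>(y_);
    }

private:
    double a_;
    double y_ = 0.0;
};

// Subtractive synthesis: filter a naive sawtooth and mix wet (filtered) with dry.
std::vector<float> subtractive_saw(double freq_hz, double cutoff_hz, double wet,
                                   double sample_rate, std::size_t frames) {
    OnePoleLowpass lp(cutoff_hz, sample_rate);
    std::vector<float> out(frames);
    double phase = 0.0;
    for (std::size_t i = 0; i < frames; ++i) {
        const float dry = static_cast<float>(2.0 * phase - 1.0);  // rich in harmonics
        const float filtered = lp.process(dry);                   // high harmonics attenuated
        out[i] = static_cast<float>((1.0 - wet) * dry + wet * filtered);
        phase += freq_hz / sample_rate;
        if (phase >= 1.0) phase -= 1.0;
    }
    return out;
}
```

Lowering `cutoff_hz` makes the tone darker; sweeping it over time with an envelope or LFO is the classic subtractive "filter sweep".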

FM Synthesis

- gets a clean tone and uses operators to modulate the pitch of the oscillator

- consists of an array of operators

- each FM sound needs at least two generators - carrier and modulator

- carrier - the oscillator whose tone you hear

- modulator - the oscillator that varies the carrier's frequency (pitch)

- cold, alien-like sound
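A two-operator FM voice can be sketched with one formula, y(t) = sin(2π·fc·t + I·sin(2π·fm·t)), where fc is the carrier, fm the modulator and I the modulation index. Strictly speaking this is phase modulation, the form commonly used to implement FM in practice. The function name and parameters are illustrative.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

constexpr double kPi = 3.14159265358979323846;

// Two-operator FM: the modulator's output is added to the carrier's phase.
// index = 0 gives a pure sine; larger indices add more sidebands (brighter sound).
std::vector<float> fm_tone(double carrier_hz, double modulator_hz, double index,
                           double sample_rate, std::size_t frames) {
    std::vector<float> out(frames);
    for (std::size_t i = 0; i < frames; ++i) {
        const double t = i / sample_rate;
        const double modulator = std::sin(2.0 * kPi * modulator_hz * t);
        out[i] = static_cast<float>(std::sin(2.0 * kPi * carrier_hz * t + index * modulator));
    }
    return out;
}
```

An integer carrier-to-modulator ratio (e.g. 440 Hz and 220 Hz) keeps the spectrum harmonic; non-integer ratios produce the inharmonic, metallic tones FM is known for.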

Wavetable Synthesis

- wavetable - a collection of wave shapes in a single oscillator, usually about 50 ms long

- realistic but requires more RAM

- can generate complex sound
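A wavetable oscillator can be sketched as reading a stored single-cycle waveform with a phase accumulator: the pitch depends only on how fast the phase moves through the table, and linear interpolation smooths between entries. This sketch plays one table; full wavetable synthesizers also morph between several tables. Names are illustrative.

```cpp
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

constexpr double kPi = 3.14159265358979323846;

class WavetableOsc {
public:
    explicit WavetableOsc(std::vector<float> table) : table_(std::move(table)) {}

    // Returns the next sample for a tone at `freq_hz`.
    float next(double freq_hz, double sample_rate) {
        const double pos = phase_ * table_.size();
        const std::size_t i0 = static_cast<std::size_t>(pos) % table_.size();
        const std::size_t i1 = (i0 + 1) % table_.size();  // wraps to the table start
        const double frac = pos - static_cast<double>(i0);
        // Linear interpolation between neighbouring table entries.
        const float value = static_cast<float>(table_[i0] + frac * (table_[i1] - table_[i0]));
        phase_ += freq_hz / sample_rate;
        phase_ -= std::floor(phase_);  // keep the phase in [0, 1)
        return value;
    }

private:
    std::vector<float> table_;
    double phase_ = 0.0;
};

// Builds a single-cycle sine table with `size` entries.
std::vector<float> make_sine_table(std::size_t size) {
    std::vector<float> t(size);
    for (std::size_t i = 0; i < size; ++i)
        t[i] = static_cast<float>(std::sin(2.0 * kPi * static_cast<double>(i) / size));
    return t;
}
```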

Effects

- Echo - distinct repetitions of the signal, delayed by 50 ms or more

- Reverberation (Reverb) - persistence of sound caused by many reflections off surrounding surfaces, used for indoor environments

- Chorus - copies of the sound with a short, slowly varying delay added to the original, adds depth

- Time stretching - altering the speed without changing the pitch

- Compressor - reduces the dynamic range of a sound, making loud sounds quieter and quiet sounds louder

- Equalization - attenuating or amplifying various frequency bands

- Filtering - specific frequency ranges can be emphasized or attenuated

- Lowpass/highpass - filters that cut off high (lowpass) or low (highpass) frequencies

- Portamento - gradual glide in pitch from one note to the next

- Vibrato - a regular, pulsating change of pitch

- Tremolo - variation in amplitude (volume)

- Panning - distributing the sound between channels by changing the volume of each
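Two of the effects above are simple enough to sketch in a few lines: an echo built from a feedback delay line (a circular buffer), and a tremolo that scales the amplitude with a sine LFO. Class and parameter names are illustrative; the echo assumes a delay of at least one sample and a feedback below 1 so the repeats die out.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

constexpr double kPi = 3.14159265358979323846;

// Echo: each output adds the signal from `delay_s` seconds ago; feeding the
// delayed signal back into the buffer makes every repeat quieter than the last.
class Echo {
public:
    Echo(double delay_s, double feedback, double mix, double sample_rate)
        : buffer_(std::max<std::size_t>(1, static_cast<std::size_t>(delay_s * sample_rate)), 0.0f),
          feedback_(feedback), mix_(mix) {}

    float process(float x) {
        const float delayed = buffer_[pos_];                          // written delay samples ago
        buffer_[pos_] = x + static_cast<float>(feedback_) * delayed;  // input plus feedback
        pos_ = (pos_ + 1) % buffer_.size();
        return static_cast<float>((1.0 - mix_) * x + mix_ * delayed);  // dry/wet blend
    }

private:
    std::vector<float> buffer_;
    double feedback_;
    double mix_;
    std::size_t pos_ = 0;
};

// Tremolo: amplitude modulated by a low-frequency oscillator (LFO).
// depth = 0 leaves the signal untouched, depth = 1 fades it fully at the LFO peak.
float tremolo(float x, double t, double rate_hz, double depth) {
    const double lfo = 0.5 * (1.0 + std::sin(2.0 * kPi * rate_hz * t));  // 0..1
    return static_cast<float>(x * (1.0 - depth * lfo));
}
```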

MOS Technology - SID

- SID (Sound Interface Device) - built-in programmable sound generator

- subtractive synthesizer, invented in 1981 for Commodore C64

- one of the first sound chips of its kind

- ADSR, 3 programmable audio oscillators, 4 waveforms (sawtooth, triangle, pulse, noise)

Other HW-based synthesizers

Atari 800XL

- POKEY chip

- 4 semi-independent audio channels

IBM PC Speaker

- 1-bit on-board generator, square waves

Nintendo Entertainment System

- 2 pulse waves, triangle, noise and PCM audio

Yamaha YM3812

- sound chip created by Yamaha in 1985, used in IBM PC sound cards

- 9 channels of sound, each made of 2 oscillators

Game Boy Advance

- 4 sound channels (2 square, 1 wave/wavetable, 1 noise) + 2 DAC channels

- PCM samples, 32 kHz sampling

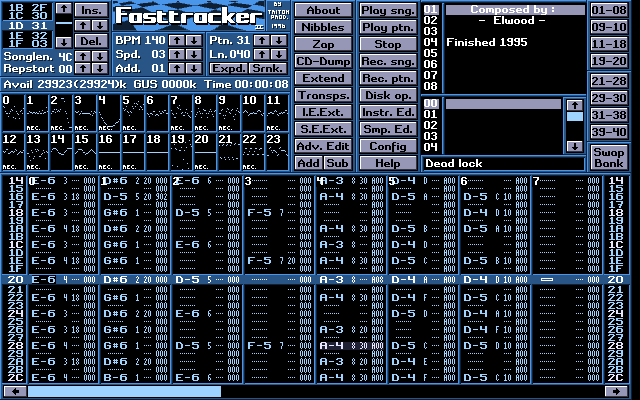

Trackers

- set of tools for wavetable synthesis

- demoscene and cracktro makers heavily used trackers for their soundtracks

- each channel has a vertical lane, with the vertical axis representing time

- songs are constructed from patterns, each pattern has a set of notes, played by instruments

- notes are generated from samples, played back at the rate needed for the required pitch

- 1987 - Ultimate SoundTracker for Amiga, *.MOD file format

- 1994 - Scream Tracker for MS-DOS, *.S3M file format

- 1994 - Fast Tracker 2 for MS-DOS, *.XM file format

- 1995 - Impulse Tracker for MS-DOS, *.IT file format

- http://modarchive.org/ - archive of MOD files

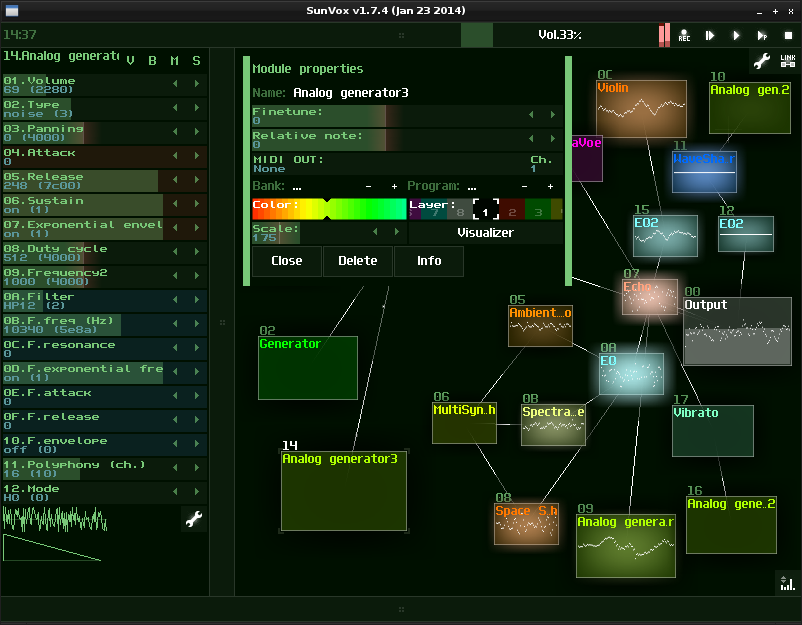

- trackers used nowadays: SunVox, OpenMPT, Renoise
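Trackers play each note by resampling the instrument's sample: stepping through it faster raises the pitch. A sketch of that idea in equal temperament, assuming a hypothetical `base_note` at which the sample sounds at its recorded pitch. Real trackers (e.g. the MOD format) use their own period tables instead, and also interpolate and loop samples.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Source samples to advance per output sample for a given note (in semitones).
// 12 semitones up doubles the step, i.e. the sample plays one octave higher.
double playback_step(int note, int base_note, double sample_rate_hz, double output_rate_hz) {
    const double pitch_ratio = std::pow(2.0, (note - base_note) / 12.0);
    return pitch_ratio * sample_rate_hz / output_rate_hz;
}

// Renders one note by resampling (nearest neighbour, no looping).
std::vector<float> render_note(const std::vector<float>& sample, double step, std::size_t frames) {
    std::vector<float> out(frames, 0.0f);
    double pos = 0.0;
    for (std::size_t i = 0; i < frames; ++i) {
        const std::size_t idx = static_cast<std::size_t>(pos);
        if (idx >= sample.size()) break;  // sample finished: the rest stays silent
        out[i] = sample[idx];
        pos += step;
    }
    return out;
}
```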

Fast Tracker 2

Example: SunVox

MIDI

- streaming protocol, introduced in 1982

- enables instruments from different manufacturers to trigger events by sending messages over a serial connection

- MIDI is not audio, but it can control audio

- General MIDI - a standard instrument map (e.g. program 1 is always a piano), so every compliant device plays the same basic set of instruments

- MIDI doesn't contain samples, only notes and commands, thus it requires sound fonts

- MIDI sounds different when played on different SW/HW (big issue in 1990's)

- old game soundtracks can be played at high quality by using good sound fonts (FluidSynth)
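Since MIDI carries notes rather than audio, a player must decode messages and turn note numbers into pitches. A sketch, assuming 3-byte channel messages: status `0x9n` is Note On and `0x8n` is Note Off for channel n, a Note On with velocity 0 counts as Note Off, and note 69 is A4 = 440 Hz in equal temperament. Running status and other message types are ignored here; the struct and function names are illustrative.

```cpp
#include <array>
#include <cmath>
#include <cstdint>
#include <optional>

struct NoteEvent {
    int channel;   // 0-15
    int note;      // 0-127, 60 = middle C, 69 = A4
    int velocity;  // 0-127
    bool on;       // true = Note On, false = Note Off
};

// Decodes a 3-byte note message; returns nothing for other message types.
std::optional<NoteEvent> decode_note(const std::array<std::uint8_t, 3>& msg) {
    const int type = msg[0] & 0xF0;     // upper nibble: message type
    const int channel = msg[0] & 0x0F;  // lower nibble: channel
    if (type != 0x80 && type != 0x90) return std::nullopt;
    const bool on = (type == 0x90) && msg[2] > 0;  // velocity 0 acts as Note Off
    return NoteEvent{channel, msg[1], msg[2], on};
}

// Equal temperament: each semitone is a factor of 2^(1/12) away from A4 = 440 Hz.
double note_to_hz(int note) {
    return 440.0 * std::pow(2.0, (note - 69) / 12.0);
}
```

The synthesizer (or SoundFont player) then picks the instrument sample for the channel's program and plays it at `note_to_hz(note)` with a volume derived from the velocity.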

VST plugins

- Virtual Studio Technology

- interface for digital audio processing

- can be integrated in almost every DAW (digital audio workstation)

- can simulate basically everything - used as effects, converters, filters, virtual instruments,...

Sound recording

- ambient sounds - directly in the environment, using a good quality microphone

- synthetic sound effects - DAW software (FL Studio, Audacity,...) and a set of VST plugins

- real sound effects - recorded by Foley artists

Music composing

- either real orchestra, synth music or combination of both

- many composing techniques: chords-first, melody-first, structure-first

Scales

- major scale - happy scale

- minor scale - sad scale (natural, harmonic, melodic)

Keys

- most songs are in a key

- key is a specific group of pitches that follow a scale

- melody shaping - moving away from the starting note and back

Bassline

- bottom notes of chords

- we can build chords from basslines

- e.g. the bassline C-G-F-A gives the chords C-E-G, G-B-D, F-A-C, A-C-E
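The bassline example follows a simple rule: in the key of C major, treat each bass note as a chord root and stack two thirds on it by taking every other note of the scale. A sketch under those assumptions (white keys only, root position triads); the function names are illustrative.

```cpp
#include <initializer_list>
#include <string>
#include <vector>

// Diatonic triad in C major built on `bass`: root, third and fifth
// (scale degrees 0, 2 and 4 above the root, wrapping around the octave).
std::string triad_from_bass(char bass) {
    static const std::string scale = "CDEFGAB";
    const std::size_t root = scale.find(bass);
    if (root == std::string::npos) return "";  // not a note of C major
    std::string chord;
    for (int step : {0, 2, 4})
        chord += scale[(root + step) % scale.size()];
    return chord;
}

// One triad per bass note, e.g. "CGFA" -> CEG, GBD, FAC, ACE.
std::vector<std::string> chords_from_bassline(const std::string& bassline) {
    std::vector<std::string> chords;
    for (char bass : bassline) chords.push_back(triad_from_bass(bass));
    return chords;
}
```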

EastWest Symphonic Orchestra

Music in games


Genre

- chiptune (pixel-art)

- electronic music (arcades, shoot-em-ups)

- instrumental music (games with a story)

Presentation

- character-based - focuses on characters (usually narrative games)

- environment-based - games with diverse locations

- emotional-based - games with strong story, music invokes feelings

Usage

- to reflect the game state

- to reflect the environment

- to reflect the story

- to alert the player about an event

Music in games

Monkey Island 2: LeChuck's Revenge (1991)

- iMUSE engine for smooth transitions between locations

The Legend of Zelda: Ocarina of Time (1998)

- important role of musical themes (e.g. Saria's Theme)

F.E.A.R. (2005)

- reactive music tailored to each individual event

League of Legends (2009)

- looping score and many in-game alerts

Mass Effect 3 (2012)

- epic emotional soundtrack

Hellblade: Senua's Sacrifice (2017)

- uses binaural audio to create a horrifying experience

Music as the core mechanic

Tiles Hop

Guitar Hero

Dance Dance Revolution

Music in games

Assets

- sound cues - collection of audio clips with metadata

- sound banks - package of sound clips and cues (e.g. all voices of one person)

Asset categories

- diegetic - sounds whose source exists in the game world (character voices, sounds of objects, footsteps)

- non-diegetic - sounds from outside the game world (soundtrack, narrator)

- background music - ambient music (e.g. river)

- score - soundtrack, is clearly recognizable

- interface music - button press

- custom music (e.g. GTA radio)

- alert - music triggered by an event

Dynamics

- linear audio - only reflects major changes (e.g. finished level)

- dynamic audio - changes in response to changes in the environment or gameplay

- adaptive audio - responds to the gameplay, adapting itself based on game attributes

Example: Linear audio

Doom 2

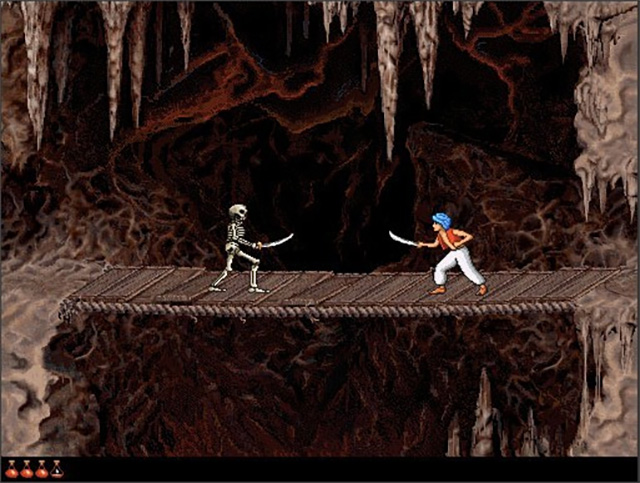

Prince of Persia 2: The Shadow and the Flame

Dynamic music

Features

- looping - going around and around

- branching - conditional music

- layering - individual layers can be muted, unmuted and re-combined

- transitions - moving smoothly from one cue to another

Looping

- experienced players will detect the looping point!

- we need to find out how much time the player spends during each session - gameplay time

- the music should be longer than the expected gameplay time to avoid repetition

- you don't know how long the player will stay there

- you don't know if the player moves fast or slow

- 2 repetitions are acceptable, 5 and more are annoying

Looping solutions

Looped composition

- beginning and end are seamlessly connected

- challenge - making the loop pleasing to the ear for longer periods

Switching keys

- we may play the melody in a different key

- enhancing the familiarity without repeating it exactly as before

Divided song

- divide the song into parts, loop only certain parts, don't start at the beginning

- lower feeling of repetition

Looping solutions

Mute the melody

- you can increase the number of loops while muting the melody

- the music can lose impact

Silence

- no undesirable effects

- reintroduction of the music may become annoying

Layered patterns

- divide the song into layers and introduce looping separately for each

- greater diversity

- may result in undesirable melodies

Transitions

- moving from one cue to another one

- never stop anything abruptly; it may disrupt the gameplay experience

Cross-fade

- simple solution, one song fades out and another one fades in

- not very good for instrumental music

Transition cue

- every song has a separate transition cue

Transition points

- every song has several points at which the transition may happen

- difficult to implement
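Transition points and cross-fades can be combined: wait for the next allowed point, then run a short equal-power cross-fade. A sketch, assuming every bar line counts as a transition point (a hypothetical simplification; real systems let the composer mark the points) and a constant tempo.

```cpp
#include <cmath>
#include <utility>

constexpr double kPi = 3.14159265358979323846;

// Earliest bar boundary (in seconds of song time) at or after `now_s`.
double next_bar_boundary(double now_s, double bpm, int beats_per_bar) {
    const double bar_s = beats_per_bar * 60.0 / bpm;
    return std::ceil(now_s / bar_s) * bar_s;
}

// Equal-power cross-fade at progress x in [0, 1]: {outgoing gain, incoming gain}.
// cos^2 + sin^2 = 1 keeps the combined power, and so the perceived loudness, steady.
std::pair<double, double> crossfade_gains(double x) {
    return {std::cos(0.5 * kPi * x), std::sin(0.5 * kPi * x)};
}
```

At 120 BPM in 4/4 a bar lasts 2 s, so a transition requested at 3.1 s would start at 4.0 s. A plain linear cross-fade dips in loudness around the middle, which is why equal-power curves are a common choice.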

iMUSE

- dynamic audio for SCUMM engine

- developed by LucasArts in 1991

- a database of musical sequences with decision points embedded in the tracks

- the segments could change, be enabled or disabled, loop, converge, branch, jump etc.

Monkey Island 2

Disruptive audio

- bad mood

- bad looping

- bad transitions

- bad mixing (e.g. ambient sound that superimposes a dialog)

- repetitive NPC voices

- little variation (same music for different levels, one sound for footsteps, explosions etc.)

- false alarm

- too many sounds playing at the same time

Adaptive music

- dynamic music reflects changes in the gameplay, but still follows pre-scripted patterns

- adaptive music is more diverse, it supports emergent gameplay

Variability options

- variable tempo

- variable pitch

- variable rhythm

- variable volume

- variable DSP/timbres

- variable melodies

- variable harmony (chordal arrangements)

Ape Out (2019) - reactive music system

Sounds in games

Sound effects

- the illusion of being immersed in a 3D atmosphere is greatly enhanced by sounds

- 2D games - main focus is the music, sound effects are usually simple

- 3D games - complex positional sounds

Types

- one-shot - always the same (barks, clicks)

- buzzers - e.g. humming, sound of tube lights

- moving objects - e.g. Doppler effect for cars

- random - e.g. a set of sounds for explosions

- periodic - e.g. waterfall

- dynamic - e.g. walking on various surfaces
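For "random" sounds, a common trick is to never play the same variation twice in a row and to add small pitch and volume offsets. A sketch; the class name, the index-based sound IDs and the offset ranges are hypothetical tuning choices.

```cpp
#include <cstddef>
#include <random>

// Picks one of `count` sound variations at random, never the same one twice in a row.
class RandomSoundPicker {
public:
    RandomSoundPicker(std::size_t count, unsigned seed) : count_(count), rng_(seed) {}

    std::size_t next() {
        if (count_ < 2) return 0;
        std::size_t pick;
        if (!has_last_) {
            pick = std::uniform_int_distribution<std::size_t>(0, count_ - 1)(rng_);
        } else {
            // Draw from the other count-1 variations and shift past the last one.
            pick = std::uniform_int_distribution<std::size_t>(0, count_ - 2)(rng_);
            if (pick >= last_) ++pick;
        }
        last_ = pick;
        has_last_ = true;
        return pick;
    }

private:
    std::size_t count_;
    std::mt19937 rng_;
    std::size_t last_ = 0;
    bool has_last_ = false;
};

// Small random pitch and volume offsets (hypothetical ranges).
struct Variation { double pitch; double volume; };

Variation random_variation(std::mt19937& rng) {
    std::uniform_real_distribution<double> pitch(0.95, 1.05), volume(0.9, 1.0);
    return {pitch(rng), volume(rng)};
}
```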

3D Sound

Attenuation

- the farther the source, the quieter the sound

Occlusion

- the sound is blocked by solid objects (e.g. a wall) and loses its high frequencies

Obstruction

- when the direct path to a sound is muffled but not enclosed

- creates delays
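Attenuation and occlusion usually end up as a gain and a low-pass cut-off per source. The distance part below follows OpenAL's clamped inverse-distance model (full volume up to the reference distance, no further attenuation beyond the maximum distance). The occlusion mapping is a hypothetical tuning, not taken from any engine.

```cpp
#include <algorithm>
#include <cmath>

// Clamped inverse-distance attenuation: gain = ref / (ref + rolloff * (d - ref)).
// Assumes ref_dist > 0 and ref_dist <= max_dist.
double attenuation(double distance, double ref_dist, double max_dist, double rolloff) {
    const double d = std::clamp(distance, ref_dist, max_dist);
    return ref_dist / (ref_dist + rolloff * (d - ref_dist));
}

// Occlusion (0 = clear path, 1 = fully blocked) mapped to a volume and a
// low-pass cut-off: a blocked source gets quieter and loses high frequencies.
struct OcclusionParams {
    double gain;
    double lowpass_cutoff_hz;
};

OcclusionParams occlusion_params(double occlusion) {
    const double o = std::clamp(occlusion, 0.0, 1.0);
    return {1.0 - 0.5 * o, 20000.0 * std::pow(0.05, o)};  // 20 kHz (clear) to 1 kHz (blocked)
}
```

The cut-off can feed a low-pass filter such as a one-pole filter, and the two gains are simply multiplied before mixing.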

3D Sound

Panning

- adjusting the volume of each channel based on the direction of the source
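Stereo panning from a source's direction can be sketched with a constant-power pan law. This assumes the horizontal angle (azimuth) relative to the listener is already known: -90° is hard left, 0° centre, +90° hard right. Stereo cannot distinguish front from back, so sources behind the listener are mirrored to the front; that is where HRTF-based approaches do better.

```cpp
#include <cmath>
#include <utility>

constexpr double kPi = 3.14159265358979323846;

// Returns {left gain, right gain} with left^2 + right^2 = 1 (constant power).
std::pair<double, double> pan_gains(double azimuth_deg) {
    double a = std::remainder(azimuth_deg, 360.0);  // wrap to [-180, 180]
    if (a > 90.0) a = 180.0 - a;                    // mirror rear sources to the front
    if (a < -90.0) a = -180.0 - a;
    const double pan = a / 90.0;                    // -1 (left) .. +1 (right)
    const double theta = (pan + 1.0) * kPi / 4.0;   // 0 .. pi/2
    return {std::cos(theta), std::sin(theta)};
}
```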

HRTF

- transfer function which models sound perception with two ears to determine positions of the source

- describes how a given sound wave input is filtered by the diffraction properties of the head

- can be used to generate binaural sound

Ambisonics

- full-sphere surround sound format that also covers sources above and below the listener

Raytracing

- physically precise sound rendering, used in VRWorks

Audio APIs

Audio API

- unlike the game loop, which runs once per frame, the audio loop is driven by the sample rate and the buffer size

- audio engines use double buffer - we are feeding one while the other is playing

- callback API - OS-level thread calls into user code when a buffer is available (e.g. CoreAudio for MacOS)

- polling API - user must periodically check if the buffer is available (e.g. Windows WASAPI)
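Double buffering can be sketched independently of any OS API: while the device plays one buffer, the engine renders into the other, and the roles swap whenever the device finishes. In this sketch the "device" is simulated by calling `on_buffer_done()`; with a callback API (e.g. CoreAudio) the OS thread would trigger it, with a polling API (e.g. WASAPI) the engine would check for a free buffer itself. The class and its methods are hypothetical.

```cpp
#include <array>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

class DoubleBuffer {
public:
    using RenderFn = std::function<void(std::vector<float>&)>;

    DoubleBuffer(std::size_t frames, RenderFn render)
        : buffers_{std::vector<float>(frames), std::vector<float>(frames)},
          render_(std::move(render)) {
        render_(buffers_[0]);  // pre-fill both buffers before playback starts
        render_(buffers_[1]);
    }

    // The buffer the device is currently playing.
    const std::vector<float>& current() const { return buffers_[playing_]; }

    // Called when the device finishes a buffer: it continues with the other
    // (already filled) one, and the released buffer is refilled meanwhile.
    const std::vector<float>& on_buffer_done() {
        const std::size_t released = playing_;
        playing_ ^= 1;
        render_(buffers_[released]);
        return buffers_[playing_];
    }

private:
    std::array<std::vector<float>, 2> buffers_;
    RenderFn render_;
    std::size_t playing_ = 0;
};
```

If rendering a buffer ever takes longer than playing one, the device runs out of data and the player hears a glitch, which is why larger buffers are safer but add latency.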

Audio Engine

Example: Godot audio loop

```cpp
OSStatus AudioDriverCoreAudio::output_callback(...) {
    AudioDriverCoreAudio *ad = (AudioDriverCoreAudio *)inRefCon;
    // ...
    // CoreAudio may pass several buffers in ioData; fill each of them.
    for (unsigned int i = 0; i < ioData->mNumberBuffers; i++) {
        AudioBuffer *abuf = &ioData->mBuffers[i];
        unsigned int frames_left = inNumberFrames;
        int16_t *out = (int16_t *)abuf->mData;

        // Render in chunks no larger than the driver's own buffer.
        while (frames_left) {
            unsigned int frames = MIN(frames_left, ad->buffer_frames);
            // Let the audio server mix the next `frames` frames into samples_in.
            ad->audio_server_process(frames, ad->samples_in.ptrw());

            // Convert the 32-bit mixed samples to 16-bit output (keep the upper 16 bits).
            for (unsigned int j = 0; j < frames * ad->channels; j++) {
                out[j] = ad->samples_in[j] >> 16;
            }

            frames_left -= frames;
            out += frames * ad->channels;  // advance by interleaved samples
        }
    }
    // ...
    return 0;
}
```

Audio Engines

- FMOD Engine - integrated in Unity (via plugin) and Unreal

- WebAudio API - node-based API for sound processing in web browsers

- RealSpace 3D Audio - pin-point accurate engine, supported by Unreal

- VRWorks - raytraced sound rendering by NVidia

- Audiokinetic Wwise - feature-rich interactive engine

- Windows Sonic - HRTF-based spatialization for Windows and HoloLens

- Project Acoustics - complete auralization system, combines HRTF with Project Triton engine

Audio engine pipeline

VRWorks

- path-traced geometric audio from NVidia

- based on Acoustic Raytracer Technology

- uses GPU to compute acoustic environmental model

- supported by Unreal Engine

WebAudio API

- high-level JavaScript API for audio processing

- features: modular routing, spatialized audio, convolution engine, biquad filters,...

- spatialized audio (distance attenuation, sound cones, obstruction, panning models)

- convolution engine (cathedral, cave, tunnel)

- audio operations are performed with audio nodes that are linked together
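The node-based routing described above can be sketched as a tiny pull-based graph in which each node asks its inputs for samples. It is written in C++ for consistency with the other examples and is not the Web Audio API itself (which is JavaScript, with nodes such as `OscillatorNode` and `GainNode` linked via `connect()`); the class names here are hypothetical.

```cpp
#include <cmath>
#include <memory>
#include <utility>
#include <vector>

constexpr double kPi = 3.14159265358979323846;

// Base node: produces the sample at time t, pulling from its inputs if it has any.
struct AudioNode {
    virtual ~AudioNode() = default;
    virtual float pull(double t) = 0;
};

struct Oscillator : AudioNode {
    double freq_hz;
    explicit Oscillator(double f) : freq_hz(f) {}
    float pull(double t) override { return static_cast<float>(std::sin(2.0 * kPi * freq_hz * t)); }
};

struct Gain : AudioNode {
    std::shared_ptr<AudioNode> input;
    double gain;
    Gain(std::shared_ptr<AudioNode> in, double g) : input(std::move(in)), gain(g) {}
    float pull(double t) override { return static_cast<float>(gain * input->pull(t)); }
};

// Sums all connected inputs, like several nodes connected to one destination.
struct Mixer : AudioNode {
    std::vector<std::shared_ptr<AudioNode>> inputs;
    float pull(double t) override {
        float sum = 0.0f;
        for (auto& in : inputs) sum += in->pull(t);
        return sum;
    }
};
```

Because nodes only know their inputs, effects such as filters or panners can be inserted anywhere in the chain without changing the other nodes.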

Node setup example

Lecture Summary

- I know what PCM is and how buffer size affects latency

- I know 4 basic types of synthesis

- I know basic waveforms of sound generators

- I know categories of audio assets in games

- I know features of dynamic music (looping, branching, layering, transitions)

- I know what attenuation, occlusion and obstruction in 3D sound are

Goodbye Quote

He who controls the past commands the future. He who commands the future conquers the past. - Kane, C&C Red Alert